Cover Story

Trends and drivers in advanced driver assistance systems (ADAS) and autonomous tech

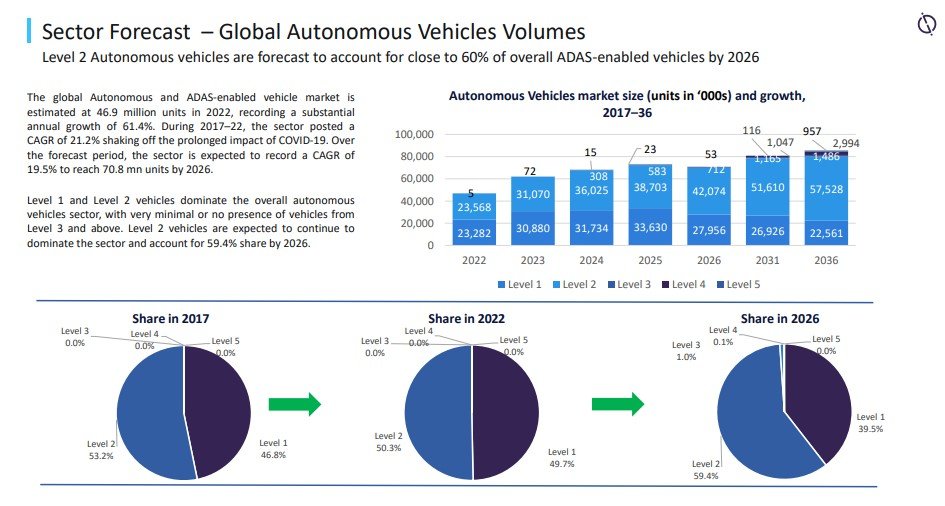

According to GlobalData the ADAS/autonomous vehicle sector (Level 1 and upwards) is forecast to register a CAGR of 19.5% over the 2021–26 period.


Development of artificial intelligence (AI) chips

A new car with Level 1 or Level 2 ADAS contains, on average, $500 worth of chips; a typical smartphone contains just $60. These chips come in many shapes and sizes, including radio-frequency and baseband chips, sensors, microcontrollers, and powerful central processing units (CPUs) that process vision data. Demand for chips from the automotive industry will increase significantly over the next couple of years.

According to some of the higher estimates, software and content will account for 80% of a vehicle's value by 2030, with AI taking a growing share of that software while also enabling precise targeting and content customization. Machine learning based on neural networks will become a standard part of the car. Tier-1 auto parts supplier Continental, among others, says that neural nets have already been incorporated into various parts of its systems. A crucial component of a car's "brain" will be algorithm-specific AI chips that combine processing and memory as closely as possible. At the core of the developing self-driving phenomenon is the capacity of vehicles to learn and improve with every mile they travel.

The leading companies are Tesla, Nvidia, Intel, and Waymo. Tesla's Autopilot hardware stack features a custom AI chip created in collaboration with AMD, and other companies claim to be working on similar projects. Startups such as Graphcore in the UK, Aurora in the US, and Cambricon in China are pursuing the same objective. Nearly every major tech company, including Apple, Amazon, Alphabet, Facebook, Microsoft, and Alibaba, is creating internal designs for its own exclusive neural-net-based AI chips. Once they have the robustness, reliability, and security demanded by automakers, these all-encompassing AI chips may, over the next five years, seriously threaten the top auto-grade chip manufacturers such as NXP, STMicroelectronics, Infineon, Mobileye (Intel), AMS, Renesas, Melexis, and even Nvidia.

Legislation and regulatory background

Since 2009, the US, EU, and Japan have formed working groups to assist in developing the standards for vehicle-to-infrastructure (V2I) and vehicle-to-vehicle (V2V) communication that serve as some of the foundational elements for the development of autonomous vehicles.

The US Department of Transportation (USDOT) Research and Innovative Technology Administration (RITA) and the EC's DG CONNECT (Communication Networks, Content and Technology) decided to coordinate research programs because both strongly believe that the development of connected and autonomous vehicles will have a significant positive impact on society. Collaboration is considered essential for preventing standardization overlap and accelerating that development. Because the most sophisticated ADAS and AV systems currently available in North America provide only Level 2 capabilities, drivers of all new models sold in the region are legally required to maintain constant attention and be ready to take control at any time should the need arise. Importantly, even when the car is operating partially autonomously, the human driver remains responsible for its decisions.

The rules governing the testing of AV features, however, are getting stricter in the US. In August 2021, the NHTSA finally announced it would investigate a number of crashes involving Tesla’s Autopilot system, opening the floodgates for further clampdowns on autonomous features in the name of safety.

Although Level 3 cars are beginning to go on sale in Europe, with the launch of Mercedes-Benz's S-Class and EQS models, their advanced ADAS features will not immediately be made available to the public because the requisite legal framework is not yet in place.

A geopolitical race to dominate the high-stakes car industry

Vehicle makers (OEMs) from the US, Europe, Japan, Korea, and China are all determined to compete in the developing international markets for ADAS and autonomous vehicles. OEMs in these markets have dominated vehicle production for the past century, and the industry accounts for a sizeable portion of their economic activity.

As new forms of mobility emerge, OEMs do not want to lose control of this value chain. China struggled to compete in the ICE era, despite having the largest and (prior to the slight downturn that began in 2018) fastest-growing car market in the world, and it sees the emergence of EVs and AVs as a strategic inflection point it can exploit to dominate the auto industry. It regards the developing Level 4 autonomy landscape as categorically new: a long play that calls for a lot of patience, money, brand-new automotive technologies, and the kind of government support that is a defining characteristic of President Xi Jinping's China. China's Geely has become a key player in the global auto industry.

Rising competition from industry outsiders

Competition in the industry is constant and fierce. Traditional automakers, Tier-1 auto component suppliers, internet companies, and ride-sharing platform providers are all fighting for market share and intellectual property. For many years, Tier-1 suppliers such as Bosch, ZF, Continental, Delphi, Denso, and Magna have been providing ever greater value content to traditional automakers, which had outsourced much of their R&D to them.

Car manufacturers have also turned directly to Intel, NXP, Qualcomm, and Infineon, the component suppliers that supply the Tier-1 parts suppliers. Their knowledge of how the parts and components fit together, and their ability to assemble them, give traditional car manufacturers a slight advantage. The Tier-1 suppliers can view the parts configuration holistically and may have more software resources than their automaker clients. The component suppliers, meanwhile, control the advancement of semiconductors and the auto-tech platforms that support the entire inverted pyramid.

Key ADAS tech and applications

Driver health and alert systems. Once cars are on the road in a highly automated mode, driver monitoring will become even more significant, so development is focusing on camera-based facial recognition technology. Driver alert systems, which aim to identify signs of driver fatigue, have been around for a while. A number of OEMs offer such systems under different names, including Ford (Driver Alert), Mercedes-Benz (Attention Assist), Toyota (Driver Monitoring System), VW (Fatigue Detection System) and Volvo (Driver Alert Control). The technology is likely to become ubiquitous. Because many things can divert a driver's attention, Magna's solution takes the form of a camera-based monitoring system that detects the driver's eye gaze and measures levels of drowsiness and distraction.

Blind spot detection systems. Active blind spot detection systems warn you when there is a vehicle in the blind spot of your rearview mirrors. This makes traffic situations such as overtaking and lane changes much safer, both in urban traffic and on the motorway. The term 'blind spot' refers to the area just to the side of and behind the car, where you may not see another car overtaking, either because your wing mirror does not cover this area or because, as you turn your head, the B-pillar obscures your vision. If a vehicle is in your blind spot and your turn signal is switched on, the system alerts you with an audible warning not to change lanes. If you don't plan to change lanes and your turn signal is switched off, a warning light stays on but does not flash, and there is no audible warning.

There are basically two blind-spot warning versions available. The first system produces an audible warning when the driver indicates that he or she intends to overtake but a vehicle is travelling in its blind spot. The second version offers a visual warning in the driver’s exterior rearview mirror the moment a vehicle enters their blind spot. These active blind spot detection systems use radar.
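The warning behaviour described above can be summarised as a small decision function. The following is a minimal sketch, not any supplier's implementation: the names `BlindSpotAlert` and `blind_spot_alert` are hypothetical, and the flashing light in the turn-signal case is inferred from the statement that the light "does not flash" when the signal is off.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BlindSpotAlert:
    """Hypothetical container for the two warning channels."""
    light: str     # "off", "steady", or "flashing"
    audible: bool  # True when a warning tone should sound


def blind_spot_alert(vehicle_in_blind_spot: bool, turn_signal_on: bool) -> BlindSpotAlert:
    """Map the radar detection and turn-signal state to a warning."""
    if not vehicle_in_blind_spot:
        # Nothing detected: no warning of any kind.
        return BlindSpotAlert(light="off", audible=False)
    if turn_signal_on:
        # Driver intends to change lanes into an occupied blind spot:
        # audible warning. The flashing light is an assumption (see lead-in).
        return BlindSpotAlert(light="flashing", audible=True)
    # Vehicle present but no lane change planned: steady light, no sound.
    return BlindSpotAlert(light="steady", audible=False)


if __name__ == "__main__":
    print(blind_spot_alert(vehicle_in_blind_spot=True, turn_signal_on=True))
    print(blind_spot_alert(vehicle_in_blind_spot=True, turn_signal_on=False))
```

Keeping the decision separate from the radar processing makes the escalation rule easy to inspect: the turn signal only changes the severity of the warning, never whether a detected vehicle is reported.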

Lane departure warning systems. Lane departure warning systems provide the driver with auditory, optical, or haptic warnings, such as steering wheel vibration, offering protection from inadvertently changing lanes. A lane departure warning alerts the driver when the car begins to leave its lane without obvious input from the driver (for instance, when the driver is distracted or is very tired). Currently available lane departure warning systems are forward-looking, vision-based systems that use algorithms to interpret video images to estimate vehicle state (lateral position, lateral velocity, heading) and roadway alignment (lane width, road curvature). A video camera in the rearview mirror (possibly integrated with the rain/light sensors) allows the electronics to track the lane markings on the road ahead. Using this video image, image processing software determines the car’s position in the lane and then compares this position with additional inputs taken from the steering angle, brake, and accelerator position sensors – and whether or not the indicators are in use. If the car begins to drift off track for no apparent reason, the driver is alerted by an audio or haptic warning such as a vibrating steering wheel.
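One way to picture the decision step is as a time-to-line-crossing check that is suppressed whenever the driver appears to be acting deliberately. The sketch below is illustrative only: the function names, the 1.8 m vehicle width, and both thresholds are assumptions rather than values from any production system, and brake and accelerator inputs are left out for brevity.

```python
import math
from dataclasses import dataclass


@dataclass
class LaneState:
    """Vehicle state as estimated from the camera image (hypothetical fields)."""
    lateral_offset_m: float      # car centre relative to lane centre, positive = left
    lateral_velocity_mps: float  # positive = drifting left
    lane_width_m: float
    vehicle_width_m: float = 1.8  # assumed typical car width


def time_to_line_crossing(state: LaneState) -> float:
    """Seconds until the car's edge reaches the marking it is drifting toward."""
    v = state.lateral_velocity_mps
    if v == 0:
        return math.inf  # not drifting at all
    # Free space on each side when the car is centred in the lane.
    half_gap = (state.lane_width_m - state.vehicle_width_m) / 2
    # Remaining gap on the side the car is moving toward.
    if v > 0:
        distance = half_gap - state.lateral_offset_m
    else:
        distance = half_gap + state.lateral_offset_m
    if distance <= 0:
        return 0.0  # already on or over the marking
    return distance / abs(v)


def should_warn(state: LaneState, indicator_on: bool, steering_rate_dps: float,
                ttlc_threshold_s: float = 1.0,
                steering_rate_threshold_dps: float = 10.0) -> bool:
    """Warn only when the drift looks unintended (thresholds are assumptions)."""
    if indicator_on:
        return False  # signalled lane change
    if abs(steering_rate_dps) > steering_rate_threshold_dps:
        return False  # active steering suggests a deliberate manoeuvre
    return time_to_line_crossing(state) < ttlc_threshold_s


if __name__ == "__main__":
    drifting = LaneState(lateral_offset_m=0.7, lateral_velocity_mps=0.3, lane_width_m=3.5)
    print(should_warn(drifting, indicator_on=False, steering_rate_dps=0.0))
```

In this framing, the indicator and steering inputs act purely as filters on the camera-derived estimate, which mirrors the comparison with additional sensor inputs described above.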

Parking assistance systems. Automated parking assistance systems typically add sensors to provide more information about the vehicle's immediate environment. Side-facing ultrasonic sensors identify potential parallel parking spaces, and ultrasonic sensors added to passive camera-based systems give the driver additional guidance while the automated parking system is in operation. These systems typically automate the steering, but the driver is still responsible for longitudinal control and for braking the vehicle to avoid collisions. With an eventual move to fully automated parking systems that control the accelerator and brakes, additional ultrasonic sensors may be required at the front of the vehicle to prevent collisions when parking in smaller spaces. Bosch has developed a solution that creates real-time maps of available parking spaces with the help of wireless sensors installed on the pavement. These sensors recognize whether a parking space is occupied and share this information via the Internet. In the future, even cars passing available parking spaces will be capable of reporting them, says the supplier.

Radar and camera sensors. Early radar-based ADAS systems mainly utilized 24GHz narrowband (NB) and ultrawideband (UWB) sensors. However, the advent of more advanced ADAS functions and the move to autonomous capability will see increasing adoption of sensors with more bandwidth to provide both a wider and longer view range. Additionally, they’ll enable the provision of better image resolution and greater ability to distinguish between objects. Higher frequency radar systems in the 77-81GHz range (79GHz band) also have the advantage of being much smaller and allowing a reduced number of sensors. A sensor in the 79GHz band is a third of the size of one operating at 24GHz. Two 24GHz UWB sensors and one 24GHz NB sensor, as might have been combined in the past, can now be supplanted by one 77GHz mid-range radar (MRR) which will provide a much wider range of vision.
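The size and resolution claims follow from two standard radar relationships: wavelength is λ = c / f, and range resolution is ΔR = c / (2B) for a sweep bandwidth B. The short calculation below is a back-of-the-envelope sketch. The 4GHz bandwidth comes from the 77-81GHz range quoted above; the 250MHz figure for a 24GHz narrowband sensor is an assumption for comparison, and the function names are illustrative.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0


def wavelength_mm(frequency_ghz: float) -> float:
    """Free-space wavelength in millimetres: lambda = c / f."""
    return SPEED_OF_LIGHT_MPS / (frequency_ghz * 1e9) * 1e3


def range_resolution_cm(bandwidth_ghz: float) -> float:
    """Smallest separable range difference in centimetres: c / (2 * B)."""
    return SPEED_OF_LIGHT_MPS / (2 * bandwidth_ghz * 1e9) * 100


if __name__ == "__main__":
    # Antenna dimensions scale roughly with wavelength, so the ratio of
    # wavelengths gives a feel for the relative sensor size.
    ratio = wavelength_mm(79) / wavelength_mm(24)
    print(f"24GHz wavelength: {wavelength_mm(24):.1f} mm")
    print(f"79GHz wavelength: {wavelength_mm(79):.1f} mm (ratio {ratio:.2f})")

    # 4GHz is the full 77-81GHz span; 0.25GHz is an assumed narrowband figure.
    print(f"Range resolution at 4GHz bandwidth: {range_resolution_cm(4.0):.2f} cm")
    print(f"Range resolution at 0.25GHz bandwidth: {range_resolution_cm(0.25):.0f} cm")
```

The wavelength ratio of roughly 0.3 is consistent with a 79GHz-band sensor being about a third of the size of a 24GHz one, and the wider bandwidth is what lets the higher-frequency sensor separate closely spaced objects.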

Given the advantages of 79GHz band sensors, the industry is moving towards wholesale adoption of the frequency for ADAS and autonomous applications in the auto sector.

Lidar systems. Like radar, Lidar senses objects from reflected signals, but it uses laser light rather than microwaves. Lidar is an expensive solution, especially when 360-degree scanning is required. Lidar has been employed cost-effectively in AEB, where typically just three laser beams are used; however, many in the industry believe cameras provide a more sustainable long-term solution.

To reduce cost, 145-degree horizontal scanning-beam Lidar is being developed, which would then require additional sensor technology such as cameras. Accepting the use of Lidar in this more limited way is the precursor to the commercialization of lower-cost solid-state Lidar.
